Introduction: Beyond Surface-Level Errors

The Persistence of Hallucination in State-of-the-Art Models

Despite rapid advances in model scale, training data, and alignment techniques, generative artificial intelligence systems continue to produce hallucinations: fluent, confident, yet demonstrably false outputs. Techniques such as retrieval-augmented generation (RAG) (Lewis et al., 2020) and reinforcement learning from human feedback (RLHF) have reduced the frequency of such errors, but they have not eliminated them. This persistence suggests that hallucination is not merely a surface-level artifact of insufficient training or improper alignment but a fundamental failure mode rooted in the architecture of current learning systems. Understanding this root cause is a critical problem for AI safety.

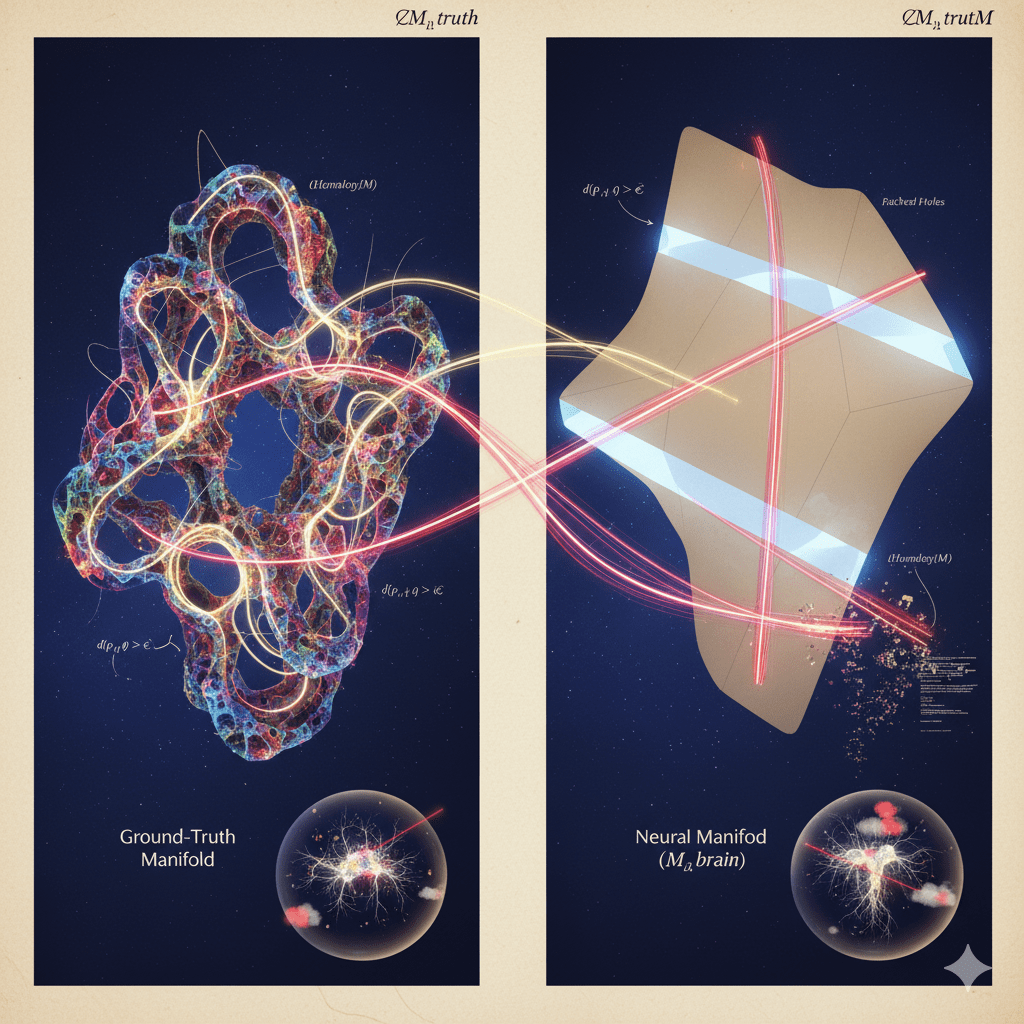

A New Thesis: Hallucination as a Topological Defect

This article advances a formal thesis: a significant class of hallucinations arises from a predictable geometric and topological failure. We argue that models, as resource-constrained learners, create compressed representations of a complex, high-dimensional “truth space” they observe through finite data. In doing so, they often fail to preserve the global topological structure of that space. Specifically, local optimization objectives encourage models to learn a smooth, efficient representation manifold, which can cause them to “collapse” non-trivial cycles (e.g., loops, voids) present in the ground-truth data. A generative query that requires traversing such a collapsed cycle forces the model to trace a shortcut. This shortcut, while efficient in the model’s internal space, decodes to a fluent but non-factual output in the task space.

Article Contributions and Structure

This work makes four primary contributions. First, we provide a rigorous, task-space definition of hallucination. Second, we present a formal lemma linking the collapse of data-space homology to guaranteed generative errors for a specific class of “cycle-required” queries. Third, we propose a risk functional, $\Delta^*$, based on persistent homology, to quantify this topological defect. Finally, we outline a set of experiments on synthetic data, large language model (LLM) embeddings, and neural population codes to test this theory’s predictions. We conclude by discussing the implications for AI safety, advocating for a shift from hallucination elimination to calibrated risk management.

The Problem: Defining Hallucination in Task Space

To formalize the topological thesis, we must first define our terms with mathematical precision. The framework consists of spaces, maps between them, and a distance metric in the final output space.

Defining the Core Components: Truth Space, Model Space, and Mappings

Let the truth space, denoted by $X$, be the underlying semantic or physical space that the model aims to represent. We assume $X$ has a stratified topology, meaning it can be decomposed into a collection of smooth manifolds of varying dimensions. Within this space exists a task-valid subset, $S \subset X$, which contains all the states, facts, or concepts relevant to the model’s intended function. A model does not observe $S$ directly. Instead, it accesses a finite dataset $D = \{y_i\}$ where each $y_i$ is an observation in the observation space $Y$. Each observation is generated by a map $o: X \to Y$, such that $y_i = o(x_i)$ for some $x_i \in S$.

A generative model learns a compressed internal representation, which we call the model space $\hat{M}$. This is typically a lower-dimensional manifold equipped with a metric $g$. An encoder, $e: Y \to \hat{M}$, maps observations into this latent space, and a decoder, $f: \hat{M} \to Y$, maps points from the model space back to the observation or task space.

A Formal Definition of ε-Hallucination

With these components, we can define hallucination not as a subjective error but as a measurable deviation in the task space. Let $d_Y$ be a distance metric in the output space $Y$.

Definition: A decoded point $f(p)$, where $p \in \hat{M}$, is an $\epsilon$-hallucination at tolerance $\epsilon > 0$ if its minimal task-space distance to the set of all valid outputs exceeds $\epsilon$. Formally, this is true if $\min_{x \in S} d_Y(f(p), o(x)) > \epsilon$.

This definition operationalizes the concept of being “off-manifold.” A point is hallucinatory if it lies in a region of the output space that is far from any valid representation, where “far” is quantified by the tolerance $\epsilon$.
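
The definition can be checked directly on a finite sample. The following is a minimal sketch, assuming that $Y$ is a Euclidean space, that $d_Y$ is the Euclidean distance, and that $o(S)$ is approximated by a finite list of valid outputs; the function names and the unit-circle example are illustrative and not part of the formal framework.

```python
import math

def task_distance(a, b):
    """Euclidean distance, standing in for the task-space metric d_Y (an assumption)."""
    return math.dist(a, b)

def is_epsilon_hallucination(decoded, valid_outputs, epsilon):
    """True if the decoded point is farther than epsilon from every valid output."""
    nearest = min(task_distance(decoded, v) for v in valid_outputs)
    return nearest > epsilon

# Finite sample of o(S): 360 points on the unit circle (illustrative data).
valid_outputs = [
    (math.cos(math.radians(k)), math.sin(math.radians(k))) for k in range(360)
]

on_manifold = (1.0, 0.0)    # coincides with a valid output
off_manifold = (0.0, 0.0)   # centre of the circle, distance 1 from every valid output

print(is_epsilon_hallucination(on_manifold, valid_outputs, epsilon=0.1))   # False
print(is_epsilon_hallucination(off_manifold, valid_outputs, epsilon=0.1))  # True
```

With a finite sample the minimum is only an estimate of the true distance to $o(S)$, so in practice the tolerance would need to account for sampling density.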

Generated Answers as Curves on a Learned Manifold

A generated answer (e.g., a sentence from an LLM, an image from a diffusion model) is not a single point but a sequence. We model this as a continuous curve, $\gamma$, traced on the learned manifold $\hat{M}$. Consequently, a generated answer is non-factual if its decoded image, $f(\gamma)$, contains a segment of non-zero length that remains outside the $\epsilon$-neighborhood of the valid data manifold $o(S)$. This captures the fluent nature of hallucinations; they are often smooth paths within the model’s learned space that simply stray into invalid territory in the output space.
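
To make the curve-level criterion concrete, the sketch below discretizes a decoded curve $f(\gamma)$ as a polyline and measures how much of its length lies outside the $\epsilon$-neighborhood. It assumes, purely for illustration, that $o(S)$ is the unit circle in the plane, so the distance to the valid set has a closed form; `length_outside` and the two example paths are hypothetical.

```python
import math

def distance_to_unit_circle(point):
    """Exact distance from a 2-D point to the unit circle (stand-in for o(S))."""
    return abs(math.hypot(point[0], point[1]) - 1.0)

def length_outside(curve, epsilon, distance_to_valid):
    """Approximate length of the polyline `curve` lying farther than epsilon from the valid set.

    A segment is counted only when both of its endpoints are outside
    the epsilon-neighbourhood.
    """
    total = 0.0
    for start, end in zip(curve, curve[1:]):
        if distance_to_valid(start) > epsilon and distance_to_valid(end) > epsilon:
            total += math.dist(start, end)
    return total

steps = 1000
# Decoded path that follows the valid manifold: upper half-circle from (1, 0) to (-1, 0).
arc = [(math.cos(math.pi * i / steps), math.sin(math.pi * i / steps)) for i in range(steps + 1)]
# Decoded shortcut: straight chord between the same endpoints, through the hole.
chord = [(1.0 - 2.0 * i / steps, 0.0) for i in range(steps + 1)]

epsilon = 0.1
print(length_outside(arc, epsilon, distance_to_unit_circle))    # 0.0
print(length_outside(chord, epsilon, distance_to_unit_circle))  # close to 2 * (1 - epsilon) = 1.8
```

Both paths are smooth and connect the same endpoints; only the chord has a segment of non-zero length outside the $\epsilon$-neighborhood, so only the chord counts as non-factual under the definition above.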

The Core Theory: Topological Collapse as a Root Cause

The central claim of this theory is that, for a specific class of queries, $\epsilon$-hallucinations are a necessary consequence of topological simplification. Models trained with local objectives on finite data are incentivized to learn the simplest manifold that explains the observations, even if this simplification discards global structure.

Key Concepts: Homology and Nontrivial Cycles in Data

In topology, homology groups ($H_k$) are algebraic invariants whose ranks (the Betti numbers) count the independent $k$-dimensional “holes” in a space. For our purposes, the rank of $H_1$ counts independent loops or cycles, the rank of $H_2$ counts voids or cavities, and so on. A nontrivial cycle is a loop in the data manifold $S$ that cannot be continuously shrunk to a point without leaving the manifold. For example, a loop that encircles the central hole of a doughnut (a torus) is a nontrivial cycle. Preserving such structures is essential for representing certain relational knowledge. For a technical overview, see Carlsson (2009) and Edelsbrunner & Harer (2010).
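
As a reference point for the examples used later in this article, the following standard values (integer coefficients) may be helpful; the rank of each group is the corresponding Betti number $\beta_k$. Here $S^1$ is the circle, $T^2$ the 2-torus, and $D^2$ the filled disk.

$$H_1(S^1) \cong \mathbb{Z}, \qquad H_1(T^2) \cong \mathbb{Z}^2, \qquad H_2(T^2) \cong \mathbb{Z}, \qquad H_k(D^2) = 0 \ \text{ for } k \ge 1.$$

In these terms, a representation that flattens a torus onto a disk loses two independent loops ($\beta_1$ drops from 2 to 0) and one void ($\beta_2$ drops from 1 to 0).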

The Topological Defect Functional ($\Delta^*$)

To quantify the degree of topological simplification, we use persistent homology (PH). PH analyzes a space by building a sequence of nested complexes (a filtration) and tracking the “birth” and “death” of topological features like cycles across this sequence (Carlsson, 2009). The output is a barcode, where the length of each bar represents the persistence of a feature.

We define a topological defect functional, $\Delta^*$, by comparing the persistence barcode of the truth space $S$ (or a high-fidelity proxy) with that of the learned model space $\hat{M}$. By matching the bars between the two barcodes using a bottleneck assignment (Edelsbrunner & Harer, 2010), we can identify cycles present in $S$ that have significantly reduced persistence (or vanish entirely) in $\hat{M}$. The defect in degree $k$, $\Delta_k$, is the sum of these positive drops in persistence. The total defect is the sum over relevant dimensions: $\Delta^* = \sum_{k \ge 1} \Delta_k$. A larger $\Delta^*$ indicates a more severe topological mismatch.
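
The arithmetic behind $\Delta_k$ and $\Delta^*$ can be sketched as follows. This is a simplified illustration, not the bottleneck assignment itself: it assumes bars are matched by rank (longest truth bar to longest model bar) and that a truth bar with no partner has persistence 0 in the model. The barcodes are made-up numbers and all function names are hypothetical.

```python
def persistence(bar):
    """Length of a bar given as a (birth, death) pair."""
    birth, death = bar
    return death - birth

def defect_in_degree(truth_bars, model_bars):
    """Delta_k: sum of positive persistence drops from truth to model.

    Simplifying assumption: the i-th longest truth bar is matched to the
    i-th longest model bar; unmatched truth bars count as fully collapsed.
    """
    truth = sorted((persistence(b) for b in truth_bars), reverse=True)
    model = sorted((persistence(b) for b in model_bars), reverse=True)
    model += [0.0] * (len(truth) - len(model))  # pad for vanished cycles
    return sum(max(t - m, 0.0) for t, m in zip(truth, model))

def total_defect(truth_barcode, model_barcode):
    """Delta*: sum of Delta_k over degrees k >= 1.

    Barcodes are dicts mapping degree k to a list of (birth, death) pairs.
    """
    return sum(
        defect_in_degree(bars, model_barcode.get(k, []))
        for k, bars in truth_barcode.items()
        if k >= 1
    )

# Hypothetical barcodes: a torus-like truth space (two loops, one void)
# and a model space that retained only one short-lived loop.
truth_barcode = {1: [(0.2, 1.8), (0.2, 1.4)], 2: [(0.5, 1.5)]}
model_barcode = {1: [(0.2, 0.6)]}

print(total_defect(truth_barcode, model_barcode))  # approximately 3.4
```

Here $\Delta_1 \approx 1.2 + 1.2$ and $\Delta_2 = 1.0$. A production implementation would replace the rank matching with a proper diagram matching from a persistent homology library.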

Lemma: How Cycle Collapse Guarantees ε-Error for Specific Queries

We can now state the conditional link between topological collapse and hallucination. A query is cycle-required if the most direct inferential path connecting its evidence anchors in the truth space $S$ traverses a nontrivial cycle.

Lemma (Cycle Collapse $\Rightarrow$ $\epsilon$-Error): Given a cycle-required query, suppose the following conditions hold: (C1) The decoder $f: \hat{M} \to Y$ is $L$-Lipschitz. (C2) The model space $\hat{M}$ is geodesically convex at the relevant scale. (C3) A nontrivial cycle in $S$ required by the query is collapsed to a trivial path in $\hat{M}$. (C4) Any shortcut path in $\hat{M}$ across the collapsed region decodes to a set of points that are at least $\epsilon^*$ away from the valid data manifold $o(S)$ in the task space.

Then any shortest-path curve $\gamma$ on $\hat{M}$ connecting the query’s anchors decodes to an $\epsilon$-hallucination for every $\epsilon \in (0, \epsilon^*)$.

Sketch of Proof: Because $\hat{M}$ is geodesically convex (C2) and the cycle has been collapsed (C3), the shortest path in $\hat{M}$ between the anchors is a shortcut that crosses the region corresponding to the “hole” in $S$ instead of going around it. By (C4), the image of this shortcut under the decoder $f$ is at least $\epsilon^*$ distant from any valid data in $Y$, which exceeds every tolerance $\epsilon < \epsilon^*$. Thus, the decoded path is guaranteed to be an $\epsilon$-hallucination. The Lipschitz condition (C1) makes the conclusion robust: $f$ cannot stretch distances by more than a factor of $L$, so paths that stay close to the shortcut in $\hat{M}$ decode to outputs that remain far from $o(S)$.
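
The role of the Lipschitz condition (C1) can be made explicit with a short estimate. The symbols $d_{\hat{M}}$ (distance on the model space) and $\delta$ (a latent-space radius) are introduced here for illustration only. Suppose $p$ lies on the shortcut, so that by (C4) its decoded image is at least $\epsilon^*$ from $o(S)$, and let $q \in \hat{M}$ satisfy $d_{\hat{M}}(p, q) \le \delta$. By the triangle inequality and (C1),

$$\min_{x \in S} d_Y\big(f(q), o(x)\big) \;\ge\; \min_{x \in S} d_Y\big(f(p), o(x)\big) - d_Y\big(f(p), f(q)\big) \;\ge\; \epsilon^* - L\,\delta.$$

Latent points within distance $\delta$ of the shortcut therefore still decode to $\epsilon$-hallucinations whenever $\epsilon < \epsilon^* - L\delta$. In other words, a small perturbation of the path in $\hat{M}$ cannot repair the error; only a path that goes around the collapsed region can.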

A Generic Lower Bound on Hallucination Risk

This lemma leads to a probabilistic claim about overall model risk. Let $\Gamma(Q)$ be the measure of cycle-required queries under a given query distribution $Q$. Under standard assumptions of finite sampling density and local training objectives that are blind to global topology, there exist functions $\mu$ and $\psi$, with $\mu$ nondecreasing in $\Gamma(Q)$ and $\psi$ nondecreasing in $\Delta^*$, such that the probability of hallucination is lower-bounded: $$P(\text{hallucination}) \ge \mu(\Gamma(Q)) \times \psi(\Delta^*, \text{density}, \text{curvature}, \text{noise})$$

In plain terms, hallucination risk scales with both the prevalence of topologically complex queries in the task distribution ($\Gamma(Q)$) and the severity of the model’s topological defects ($\Delta^*$).

Evidence and Examples: From Synthetic Tori to Neural Manifolds

The theory is abstract but can be grounded in concrete computational and biological systems.

Toy Model: Collapsing a Torus with a Variational Autoencoder

A simple testbed is a Variational Autoencoder (VAE) (Kingma & Welling, 2014) trained on data sampled from a 2-torus embedded in a higher-dimensional space. We expect a standard VAE with a simple Gaussian prior on its latent space to represent the torus as a flattened, filled region, which is a topologically simpler space (a disk). We predict that interpolating in the latent space across the region corresponding to the collapsed hole of the torus will produce decoded outputs that lie far from the torus, consistent with our definition of $\epsilon$-hallucination. The measured values of $\Delta_1$ and $\Delta_2$ should correlate strongly with this error rate.
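
The geometry of this prediction can be previewed without training anything. The sketch below is not a VAE: it assumes a torus embedded in $\mathbb{R}^3$ with illustrative radii, and uses straight-line interpolation in the ambient space as a stand-in for decoding a path through a flattened latent space. The distance to the torus is computed in closed form; all names are hypothetical.

```python
import math

R, r = 2.0, 1.0  # major and minor radii of the torus (illustrative values)

def torus_point(theta, phi):
    """Point on the torus in R^3 for angles (theta, phi)."""
    ring = R + r * math.cos(phi)
    return (ring * math.cos(theta), ring * math.sin(theta), r * math.sin(phi))

def distance_to_torus(p):
    """Exact Euclidean distance from a point in R^3 to the torus surface."""
    x, y, z = p
    return abs(math.hypot(math.hypot(x, y) - R, z) - r)

def straight_line(a, b, steps):
    """Ambient linear interpolation (assumed stand-in for a decoded latent shortcut)."""
    return [
        tuple(ai + (bi - ai) * t / steps for ai, bi in zip(a, b))
        for t in range(steps + 1)
    ]

def hallucination_rate(path, epsilon):
    """Fraction of decoded points farther than epsilon from the torus."""
    return sum(distance_to_torus(p) > epsilon for p in path) / len(path)

# Anchors on the inner equator, on opposite sides of the central hole.
a = torus_point(0.0, math.pi)
b = torus_point(math.pi, math.pi)
path = straight_line(a, b, steps=600)

print(max(distance_to_torus(p) for p in path))  # approximately 1.0, at the centre of the hole
for epsilon in (0.25, 0.5, 0.9):
    print(epsilon, round(hallucination_rate(path, epsilon), 3))  # roughly 1 - epsilon
```

In an actual experiment the straight line would be replaced by the decoder applied to a latent interpolation, and the flagged fraction would be compared against the measured $\Delta_1$ and $\Delta_2$.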

Case Study 1: Measuring Topological Defects in LLM Embeddings

For LLMs, the learned model space $\hat{M}$ can be approximated by the manifold of hidden state representations. We can equip this space with a pullback Fisher information metric to analyze its geometry (Kim et al., 2021). By collecting embeddings of known factual relationships (e.g., from a knowledge graph) and computing their persistent homology, we can estimate a “truth” barcode. Comparing this to the barcode of embeddings generated during a query allows us to compute $\Delta^*$ on the fly. We predict that prompts crafted to be “cycle-required” (e.g., “What is the relationship between X and Z, given that X relates to Y and Y relates to Z in a circular, non-hierarchical way?”) will exhibit higher hallucination rates, particularly in models with a large measured $\Delta^*$.

Case Study 2: Confabulation in Biological Neural Manifolds

The brain faces similar resource constraints. Neural population activity in regions like the hippocampus and entorhinal cortex often lies on low-dimensional manifolds. Low-dimensional population structure of this kind has been reported in motor cortex during reaching (Churchland et al., 2012), and the activity of head-direction cells is commonly described as tracing a ring-like manifold. Such structure reflects the topology of the variable being encoded (e.g., the circle $S^1$ for head direction), and a related topological account has been proposed for hippocampal spatial maps (Dabaghian et al., 2012). This aligns with the manifold hypothesis. Confabulation, a form of memory error, can be modeled as a failure of geodesic completion on these neural manifolds. We predict that lesions or pharmacological interventions that disrupt neural coordination, thereby reducing the persistence of task-relevant cycles in population activity, will increase the rate of structured, plausible-but-false memory errors.

Objections and Counterarguments

No single theory can explain all failure modes. It is crucial to delineate the scope of the topological thesis and acknowledge alternative mechanisms.

The Limits of Geometric Intuition: Discrete Logic and Symbolic Facts

Our model relies on a continuous, geometric framework. It is less suited to explaining errors in domains governed by discrete, symbolic logic. For instance, a model’s failure on a multi-digit arithmetic problem is unlikely to be a topological error in the sense we have described. The representation of symbolic knowledge may not form a connected manifold, rendering geodesic path analysis inapplicable. The theory is most potent for knowledge that has an inherent geometric or relational structure.

The Role of Non-Geodesic Mechanisms: Retrieval and Attention Jumps

Modern architectures like Transformers do not always generate outputs by traversing a smooth path in a fixed representation space. The attention mechanism can be seen as dynamically reconfiguring the effective geometry of the space at each step. Furthermore, RAG systems (Lewis et al., 2020) explicitly jump to disparate points in the manifold by retrieving external documents. These “teleportations” break the assumption of a continuous curve $\gamma$ on $\hat{M}$. However, our theory still applies to the generative steps between retrievals.

Are All Errors Topological? Delineating Scope

Many hallucinations may stem from more mundane causes, such as data contamination, statistical flukes in the training set (e.g., spurious correlations), or optimization failures. Our claim is not that all hallucinations are topological but that topological collapse is a generic, underlying mechanism that produces a specific and predictable class of structured, fluent, and often complex hallucinations that are particularly resistant to simple data augmentation or fine-tuning.

Synthesis: A Unified Framework for Measuring and Mitigating Risk

The topological theory is not merely descriptive; it is prescriptive. It provides a blueprint for new methods to measure risk and implement safer systems.

Practical Methods for Estimating the Defect Functional $\Delta^*$

Estimating $\Delta^*$ requires building filtrations on point cloud data. For the truth space proxy, one can use embeddings of a vetted knowledge base or a curated text corpus. For the model space, one can analyze the cloud of hidden states generated during a query. A standard construction such as the Vietoris-Rips filtration can then be used to build simplicial complexes from this data and compute their persistent homology; with sufficiently dense sampling, the homology of the underlying manifold can be recovered with high confidence (Niyogi et al., 2008). The bottleneck distance provides a robust way to compare barcodes, since it is stable under small perturbations of the data (Chazal et al., 2014), and the associated matching yields the persistence drops that constitute $\Delta^*$.
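
To show what the Vietoris-Rips construction computes, here is a brute-force sketch that builds the complex at a single scale and returns its first two Betti numbers over the field with two elements. It is a single slice of a filtration, not a persistence computation, and it enumerates all triangles, so it is only suitable for very small point clouds. The helper names are hypothetical; a real pipeline would use a dedicated persistent homology library.

```python
import math
from itertools import combinations

def gf2_rank(columns):
    """Rank over GF(2) of a matrix whose columns are given as integer bitmasks."""
    pivots = {}  # highest set bit -> reduced column with that leading bit
    for col in columns:
        while col:
            high = col.bit_length() - 1
            if high not in pivots:
                pivots[high] = col
                break
            col ^= pivots[high]  # eliminate the leading bit and keep reducing
    return len(pivots)

def betti_numbers(points, scale):
    """(beta_0, beta_1) of the Vietoris-Rips complex of `points` at one scale."""
    n = len(points)
    edges = [
        (i, j) for i, j in combinations(range(n), 2)
        if math.dist(points[i], points[j]) <= scale
    ]
    edge_index = {e: k for k, e in enumerate(edges)}
    # Boundary of an edge is its two vertices; boundary of a triangle is its three edges.
    d1 = [(1 << i) | (1 << j) for i, j in edges]
    d2 = [
        (1 << edge_index[(i, j)]) | (1 << edge_index[(i, k)]) | (1 << edge_index[(j, k)])
        for i, j, k in combinations(range(n), 3)
        if (i, j) in edge_index and (i, k) in edge_index and (j, k) in edge_index
    ]
    rank_d1, rank_d2 = gf2_rank(d1), gf2_rank(d2)
    beta_0 = n - rank_d1                       # connected components
    beta_1 = (len(edges) - rank_d1) - rank_d2  # independent loops
    return beta_0, beta_1

# Twelve evenly spaced points on the unit circle (illustrative data).
circle = [(math.cos(2 * math.pi * k / 12), math.sin(2 * math.pi * k / 12)) for k in range(12)]
for scale in (0.1, 0.6, 2.1):
    print(scale, betti_numbers(circle, scale))
# 0.1 (12, 0)   isolated points
# 0.6 (1, 1)    one connected loop
# 2.1 (1, 0)    the loop has been filled in
```

Sweeping the scale and recording when the loop appears and disappears is what produces a bar in the degree-1 barcode.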

Identifying “Cycle-Required” Queries in Practice

Detecting cycle-required queries a priori is challenging. A practical proxy is to construct a high-quality neighborhood graph from the truth-proxy embeddings (e.g., a kNN graph). Given two evidence anchors for a query, one can test whether the short paths between them in this graph fall into distinct classes, that is, whether two such paths together form a loop that cannot be contracted within the larger graph structure. For instance, if the anchors lie on opposite sides of a large hole in the evidence graph, the query is likely cycle-required.
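
One cheap heuristic in this spirit is a detour ratio: the shortest-path distance between the anchors in the kNN graph divided by their straight-line distance. This is an assumption of this sketch, not a contractibility test; a large ratio only suggests that the graph has to route around something. The function names and the ring-shaped data are hypothetical.

```python
import heapq
import math

def knn_graph(points, k):
    """Symmetric k-nearest-neighbour graph as {node: {neighbour: edge_length}}."""
    graph = {i: {} for i in range(len(points))}
    for i, p in enumerate(points):
        nearest = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )[:k]
        for dist, j in nearest:
            graph[i][j] = dist
            graph[j][i] = dist
    return graph

def graph_distance(graph, source, target):
    """Dijkstra shortest-path length; math.inf if the target is unreachable."""
    best = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        dist, node = heapq.heappop(queue)
        if node == target:
            return dist
        if dist > best.get(node, math.inf):
            continue  # stale queue entry
        for nxt, weight in graph[node].items():
            candidate = dist + weight
            if candidate < best.get(nxt, math.inf):
                best[nxt] = candidate
                heapq.heappush(queue, (candidate, nxt))
    return math.inf

def detour_ratio(points, graph, a, b):
    """Graph distance divided by straight-line distance between anchors a and b."""
    return graph_distance(graph, a, b) / math.dist(points[a], points[b])

# Truth-proxy embeddings arranged on a ring (illustrative data).
ring = [(math.cos(2 * math.pi * k / 24), math.sin(2 * math.pi * k / 24)) for k in range(24)]
graph = knn_graph(ring, k=2)

print(round(detour_ratio(ring, graph, 0, 1), 3))   # 1.0: neighbouring anchors
print(round(detour_ratio(ring, graph, 0, 12), 3))  # about 1.57: anchors across the hole
```

A threshold on this ratio would have to be calibrated per dataset, and curved but hole-free regions can also produce ratios above 1, so the heuristic is best treated as a filter that nominates queries for a proper topological check.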

A Topology-Aware Abstention and Retrieval Mechanism

Equipped with these tools, we can design a safety mechanism. For any given query $q$, we can compute a risk score $r(q)$ based on a weighted combination of: (1) the local topological defect $\Delta^*$ in the neighborhood of the query embeddings, (2) the bottleneck distance between the “truth” barcode and the prompt-conditioned barcode, and (3) the curvature of the generated path $\gamma$. When $r(q)$ exceeds a pre-defined threshold, the system can trigger one of two actions: abstain from answering, or initiate a targeted RAG call designed to provide the missing “bridge” across the suspected topological hole.
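
The decision rule can be sketched in a few lines. The weights, the threshold, and the `retrieval_available` flag that chooses between the two actions are all assumptions made for illustration; the text above does not fix how the terms are weighted or how the action is selected.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RiskWeights:
    """Hypothetical weights for the three risk terms."""
    defect: float = 1.0
    bottleneck: float = 1.0
    curvature: float = 0.5

def risk_score(local_defect, bottleneck_distance, path_curvature, weights=RiskWeights()):
    """r(q): weighted combination of local defect, bottleneck distance, and path curvature."""
    return (
        weights.defect * local_defect
        + weights.bottleneck * bottleneck_distance
        + weights.curvature * path_curvature
    )

def decide(score, threshold, retrieval_available):
    """Answer below the threshold; otherwise retrieve if possible, else abstain."""
    if score <= threshold:
        return "answer"
    return "retrieve" if retrieval_available else "abstain"

low = risk_score(local_defect=0.2, bottleneck_distance=0.1, path_curvature=0.4)
high = risk_score(local_defect=1.2, bottleneck_distance=0.8, path_curvature=0.4)

print(decide(low, threshold=1.0, retrieval_available=True))    # answer
print(decide(high, threshold=1.0, retrieval_available=True))   # retrieve
print(decide(high, threshold=1.0, retrieval_available=False))  # abstain
```

Because the three terms live on different scales, the weights and threshold would need to be calibrated on held-out queries with known outcomes.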

Implications for AI Safety and Development

This perspective reframes the problem of hallucination, shifting the focus of mitigation efforts.

Moving from Elimination to Calibrated Risk Management

If topological collapse is a generic consequence of learning from finite data under resource constraints, then eliminating all hallucinations may be impossible. The goal should instead be to build systems that can reliably estimate their own risk of hallucination on a per-query basis and act accordingly. This is a move towards a more mature, engineering-based approach to AI safety, analogous to how engineers manage material stress and failure probabilities in a bridge. An exploration of related ideas can be found in our post on deep learning theory.

The Need for Topology-Aware Training Objectives

Current training objectives are typically local; they focus on next-token prediction or reconstruction error for single data points. Our theory suggests the need for novel regularization terms or contrastive losses that explicitly penalize topological simplification. For example, one could design a loss term that encourages the persistence barcode of a batch of latent representations to match the barcode of the corresponding data in the input space (Moor et al., 2020).
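
A minimal sketch of such a term, in the spirit of the topological autoencoder loss, is shown below. It covers degree 0 only: the minimum spanning tree edges of a point cloud are the distances at which connected components merge in a Vietoris-Rips filtration, and the loss penalizes disagreement between input-space and latent-space distances on those edges. It computes a value in plain Python and is not differentiable as written; extending it to the loops and voids ($k \ge 1$) discussed in this article would require a full persistence computation. All names are hypothetical.

```python
import math

def mst_edges(points):
    """Edges (i, j) of a Euclidean minimum spanning tree, via Prim's algorithm."""
    # best[j] = (distance from j to the tree, tree vertex realising it)
    best = {j: (math.dist(points[0], points[j]), 0) for j in range(1, len(points))}
    edges = []
    while best:
        j = min(best, key=lambda node: best[node][0])
        _, i = best.pop(j)
        edges.append((i, j))
        for m in best:
            d = math.dist(points[j], points[m])
            if d < best[m][0]:
                best[m] = (d, j)
    return edges

def topological_loss(inputs, latents):
    """Squared distance mismatch on the MST edges of the input and latent clouds."""
    loss = 0.0
    for edges in (mst_edges(inputs), mst_edges(latents)):
        for i, j in edges:
            gap = math.dist(inputs[i], inputs[j]) - math.dist(latents[i], latents[j])
            loss += 0.5 * gap * gap
    return loss

# Batch of inputs on a circle, and a latent code that projects the circle onto a line.
inputs = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in range(8)]
collapsed = [(x, 0.0) for x, _ in inputs]

print(topological_loss(inputs, inputs))     # 0.0: distances on all selected edges agree
print(topological_loss(inputs, collapsed))  # positive: the projection merges distinct points
```

In training, a term like this would be added to the reconstruction loss with a tunable weight, using a framework that can backpropagate through the selected distances.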

Reporting Topological Risk Alongside Model Outputs

A direct application of this work is to augment model outputs with a “topological uncertainty” score. Just as weather forecasts provide a probability of rain, a generative AI could provide an answer along with a metric indicating the risk that it relied on a topologically invalid shortcut. This would allow users to better calibrate their trust in the system’s outputs, a crucial step for deployment in high-stakes domains like medicine, law, and science.

Conclusion: From Inevitability to Risk Management

Summary of the Topological Thesis

We have argued that a significant class of AI hallucinations is not a random bug but a predictable structural failure. Resource-constrained models trained on finite data with local objectives create compressed representations that often collapse the native topology of the truth space. When a query requires reasoning across one of these collapsed cycles, the model’s most efficient path in its own latent space decodes to a fluent but non-factual answer. This is a generic mechanism, applicable to diverse architectures from VAEs to LLMs and even biological neural systems.

Future Work: Tighter Bounds and Information-Geometric Diagnostics

This paper lays a theoretical foundation. Future work must tighten the proposed bounds, particularly for the complex geometry of Transformer models. Unifying the persistent homology framework ($\Delta^*$) with diagnostics from information geometry, such as Ricci curvature, could yield a more comprehensive understanding of representation space defects. The ultimate goal is to move from this theoretical framework to production-grade tools that make generative AI systems safer and more reliable. For a broader view, consider our previous work on model reliability.

End Matter

Assumptions

- Finite Data & Bounded Capacity: The model does not have infinite data or computational resources, forcing it to learn a compressed representation.

- Local Training Objectives: The model’s training loss is primarily based on local properties (e.g., next-token prediction, local reconstruction error) and is not explicitly designed to preserve global topology.

- Lipschitz Continuity: The decoder map is reasonably smooth (L-Lipschitz), ensuring that small changes in latent space do not cause arbitrarily large changes in the output space. This is a common and reasonable assumption for neural networks.

- Valid PH Matching: We assume that the truth space and model space can be sampled sufficiently well to produce meaningful and comparable persistence barcodes.

Limits

- Symbolic and Discrete Tasks: The theory is most applicable to data with underlying geometric or continuous structure and less so for tasks based on discrete, symbolic logic.

- Topology-Aware Architectures: The inevitability result is conditional. A model trained with an explicit topology-preserving loss function could, in principle, learn to preserve necessary cycles and would be less susceptible to this failure mode.

- Non-Geodesic Operations: The model does not fully capture failure modes in systems that rely heavily on non-geodesic operations like long-range attention jumps or external data retrieval, which break the “continuous path” assumption.

Testable Predictions

- Correlation of Risk: The rate of targeted, cycle-required hallucinations will positively correlate with the measured topological defect functional, $\Delta^*$.

- Effect of Regularization: Introducing a topology-preserving regularization term into the model’s loss function will reduce $\Delta^*$ and, consequently, lower the rate of cycle-required hallucinations compared to a baseline model.

- Biological Confabulation: In neural recording experiments, induced lesions or noise that reduce the persistence of a known topological feature in a neural manifold (e.g., the head-direction ring) will increase the rate of behavioral errors consistent with confabulation along that dimension.

References

- Brown, T., Mann, B., Ryder, N., Subbiah, M., Kaplan, J. D., Dhariwal, P., … & Amodei, D. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877-1901.

- Carlsson, G. (2009). Topology and data. Bulletin of the American Mathematical Society, 46(2), 255-308.

- Chazal, F., de Silva, V., & Oudot, S. (2014). Persistence stability for geometric complexes. Geometriae Dedicata, 173, 193-214.

- Churchland, M. M., Cunningham, J. P., Kaufman, M. T., Foster, J. D., Nuyujukian, P., Ryu, S. I., & Shenoy, K. V. (2012). Neural population dynamics during reaching. Nature, 487(7405), 51-56.

- Dabaghian, Y., Mémoli, F., Frank, L., & Carlsson, G. (2012). A topological paradigm for hippocampal spatial map formation. PLoS Computational Biology, 8(8), e1002581.

- Edelsbrunner, H., & Harer, J. L. (2010). Computational topology: An introduction. American Mathematical Society.

- Friston, K. (2010). The free-energy principle: a unified brain theory? Nature Reviews Neuroscience, 11(2), 127-138.

- Jacot, A., Gabriel, F., & Hongler, C. (2018). Neural tangent kernel: Convergence and generalization in neural networks. Advances in Neural Information Processing Systems, 31.

- Kim, P. T., Nielsen, F., & Amari, S. I. (2021). Information geometry of deep networks. Foundations and Trends® in Machine Learning, 14(3-4), 287-398.

- Kingma, D. P., & Welling, M. (2014). Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114.

- Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin, V., Goyal, N., … & Kiela, D. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459-9474.

- Loftus, E. F. (2005). Planting misinformation in the human mind: A 30-year investigation of the malleability of memory. Learning & Memory, 12(4), 361-366.

- McInnes, L., Healy, J., & Melville, J. (2018). UMAP: Uniform manifold approximation and projection for dimension reduction. arXiv preprint arXiv:1802.03426.

- Moor, M., Horn, M., Rieck, B., & Borgwardt, K. (2020). Topological autoencoders. In International Conference on Machine Learning (pp. 7045-7054). PMLR.

- Niyogi, P., Smale, S., & Weinberger, S. (2008). Finding the homology of submanifolds with high confidence from random samples. Discrete & Computational Geometry, 39, 419-441.

- Roweis, S. T., & Saul, L. K. (2000). Nonlinear dimensionality reduction by locally linear embedding. Science, 290(5500), 2323-2326.

- Tenenbaum, J. B., de Silva, V., & Langford, J. C. (2000). A global geometric framework for nonlinear dimensionality reduction. Science, 290(5500), 2319-2323.